Customer research analysis is just a fancy way of saying you're digging into customer data to figure out what your customers actually need. For a founder, it's not an academic exercise. It's the process of finding the why behind what people do so you can shape your product, your marketing, and your entire go-to-market (GTM) strategy.

Why Most Customer Research Falls Flat for Founders

Let's be direct. Most founders are drowning in data but have no real idea what to do with it. We were certainly in that boat at BillyBuzz in the early days. We had spreadsheets packed with survey responses and analytics dashboards full of blinking numbers, but none of it felt connected to the decisions we had to make every single day.

This is where most research efforts die. You get stuck between collecting a mountain of data and actually understanding what it all means. It's a classic trap that leads to building the wrong features and launching marketing campaigns that completely miss the mark.

From Vanity Metrics to Actionable Intelligence

When we first started, we were obsessed with vanity metrics—numbers that looked great in a pitch deck but told us nothing about whether customers found our product valuable. We’d celebrate every spike in sign-ups and website traffic, but we couldn't answer the questions that really mattered:

- Why were so many users bailing after just one week?

- What specific problem were our most loyal customers solving with our tool?

- Which features were just noise, and which ones were the absolute essentials?

Everything changed when we stopped just collecting data and started interrogating it. We moved from tracking what users were doing to digging deep into why they were doing it. That shift is the heart of effective founder-led analysis.

"Your biggest competitive advantage isn't your feature set; it's how deeply you understand your customer's problem. True customer research analysis gives you that understanding."

Getting this right isn't just a feel-good exercise; it directly impacts your bottom line. Forrester's 2025 Global Customer Experience Index found that CX quality is actually getting worse for many companies. A staggering 25% of U.S. brands saw their CX rankings fall in 2025, while only 7% improved. The report makes a direct link between better CX, lower churn, and higher revenue. You can dig into more of these findings on customer experience from Forrester.

The goal of this guide is to hand you our playbook for turning customer feedback into a strategic weapon. We’ll show you how to sidestep common mistakes like asking leading questions or ignoring qualitative feedback, so your research becomes the engine for real growth, not just another ignored spreadsheet.

Our Scrappy Playbook for Collecting Customer Data

You don't need a massive budget to get high-quality customer data. At BillyBuzz, we’ve pieced together a scrappy, low-cost system that gets us right to the heart of our customers' real problems. This isn't just theory—it’s the exact, battle-tested process we use every single day.

We treat data collection as an always-on activity. It’s a continuous loop focusing on three core methods: surgical social listening, lightweight surveys, and quick-fire interviews. Each one feeds the others, creating a constant stream of both qualitative and quantitative feedback that directly shapes our product decisions.

Hunting for Unfiltered Insights on Reddit

Forget waiting for feedback to come to you. Reddit is an absolute goldmine of unfiltered, honest conversations. We actively monitor specific communities where our ideal customers are already talking about their biggest headaches.

Our setup is simple but incredibly effective.

- Monitored Subreddits: We stick to hubs like r/saas, r/startups, and r/smallbusiness. These are the digital watercoolers where founders and operators hang out, share their frustrations, and look for new solutions.

- Keyword Alerts: We use our own tool, BillyBuzz, to pipe alerts directly into a dedicated Slack channel. The real magic isn't the tool, though; it's the keywords we track. We go way beyond just our brand name.

Here's our actual configuration for tracking customer sentiment and competitor mentions on Reddit.

BillyBuzz's Reddit Alert Rules

| Subreddit | Keyword Filters | Purpose |
|---|---|---|
| r/saas | "alternative to [competitor]", "frustrated with [competitor]", "hate that [competitor]" | Identify competitor weaknesses and churn signals. |
| r/startups | "how do you handle [pain point]", "recommend a tool for...", "software for [job-to-be-done]" | Discover unmet needs and new feature ideas. |
| r/smallbusiness | "[competitor] pricing", "is [competitor] worth it" | Monitor market sentiment and pricing sensitivity. |

This proactive listening gives us a real-time pulse on the market. It shows us where our competitors are dropping the ball and where the real opportunities are. Setting this up is surprisingly fast; check out our guide on how you can set up Slack alerts for Reddit mentions in 10 minutes.
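If you want to prototype rules like the ones in the table before committing to a tool, here's a minimal Python sketch. It is not how BillyBuzz works internally. The placeholder values (`AcmeCRM`, `lead tracking`, `social listening`) and the function names are made up for illustration, and the matching is plain case-insensitive substring search.

```python
from itertools import product

# Hypothetical placeholder values; swap in your own competitors, pain points, and jobs.
PLACEHOLDERS = {
    "competitor": ["AcmeCRM"],
    "pain_point": ["lead tracking"],
    "job": ["social listening"],
}

# Subreddits, phrase templates, and purposes taken from the table above.
RULES = [
    {
        "subreddit": "saas",
        "templates": [
            "alternative to {competitor}",
            "frustrated with {competitor}",
            "hate that {competitor}",
        ],
        "purpose": "Identify competitor weaknesses and churn signals",
    },
    {
        "subreddit": "startups",
        "templates": [
            "how do you handle {pain_point}",
            "recommend a tool for",
            "software for {job}",
        ],
        "purpose": "Discover unmet needs and new feature ideas",
    },
    {
        "subreddit": "smallbusiness",
        "templates": ["{competitor} pricing", "is {competitor} worth it"],
        "purpose": "Monitor market sentiment and pricing sensitivity",
    },
]


def expand(template):
    """Return every lowercase phrase a template produces once placeholders are filled."""
    keys = list(PLACEHOLDERS)
    phrases = set()
    for combo in product(*(PLACEHOLDERS[key] for key in keys)):
        phrases.add(template.format(**dict(zip(keys, combo))).lower())
    return phrases


def matching_purposes(subreddit, text):
    """List the purpose of each rule that fires for a post in the given subreddit."""
    name = subreddit.lower()
    if name.startswith("r/"):
        name = name[2:]
    lowered = text.lower()
    hits = []
    for rule in RULES:
        if rule["subreddit"] != name:
            continue
        phrases = set().union(*(expand(t) for t in rule["templates"]))
        if any(phrase in lowered for phrase in phrases):
            hits.append(rule["purpose"])
    return hits
```

Calling `matching_purposes("r/saas", "Any good alternative to AcmeCRM out there?")` returns the churn-signal purpose, while the same text in r/smallbusiness matches nothing, because each rule only applies to its own subreddit.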

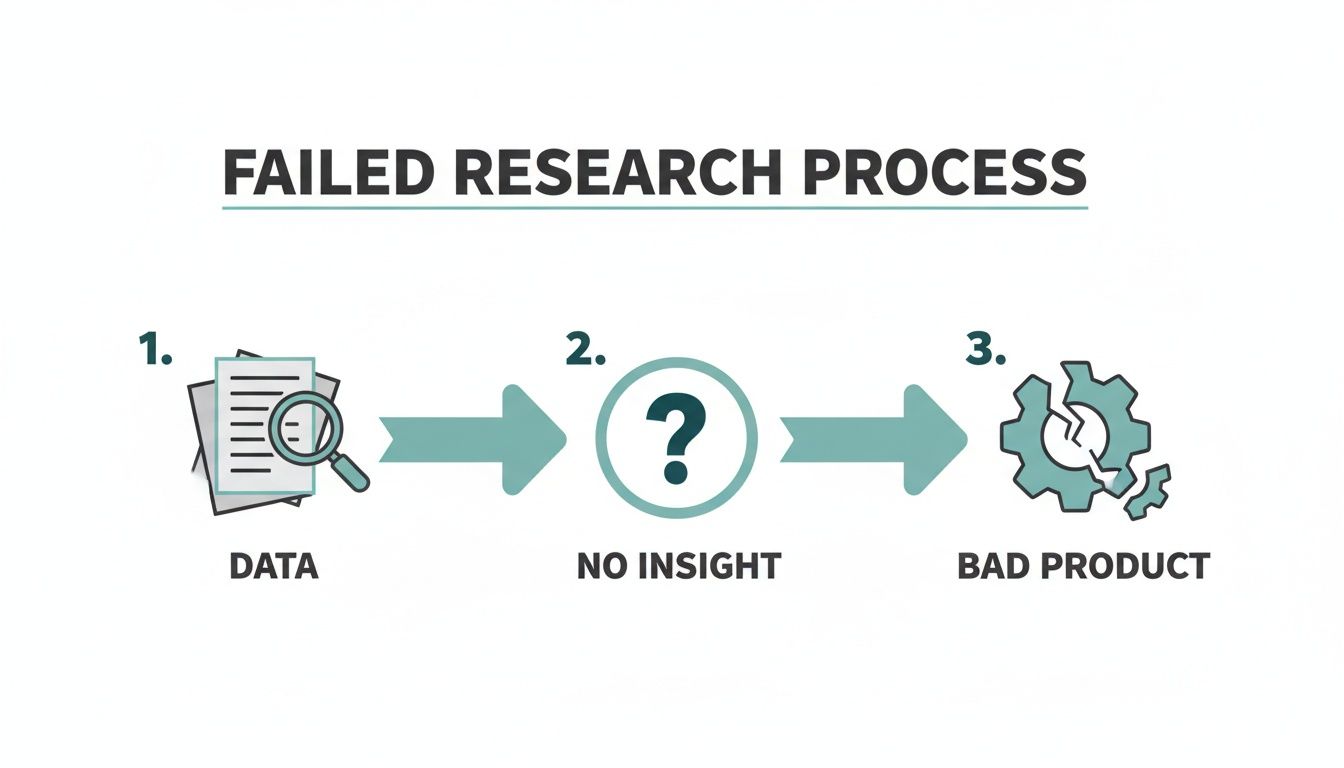

Without a solid process, data collection can quickly become a dead end. You end up with a pile of information but no clear path forward.

This simple flow illustrates the critical risk of just gathering data without a plan to analyze it, which almost always results in wasted effort and a product nobody wants.

Our 15-Minute Interview Framework

While online surveys are the most common way to get quantitative data—used by 85% of researchers—we get so much more from quick, informal qualitative chats. Direct conversations are still priceless for uncovering the why.

We aim for brief, 15-minute interviews with new users or even people who just mention a relevant pain point on Reddit. Our outreach is intentionally non-salesy and direct. Here's a template we use constantly:

Subject: Your comment on r/saas

Body: Hey [Name], saw your comment on r/saas about dealing with [pain point].

My co-founder and I are building a tool to solve that exact problem. Would you be open to a 15-min chat sometime this week to share your thoughts? No sales pitch, just want to learn from your experience.

Cheers,

[Your Name]

The goal isn't to sell; it's to listen. A 15-minute conversation with the right person is worth more than 100 survey responses from the wrong audience.

We use Tally.so for quick, free surveys to spot broader trends, but these direct interviews provide the rich context we could never get from a form. If you're looking to build out your own playbook, it's worth exploring these essential user research methods that help you get past the surface-level feedback.

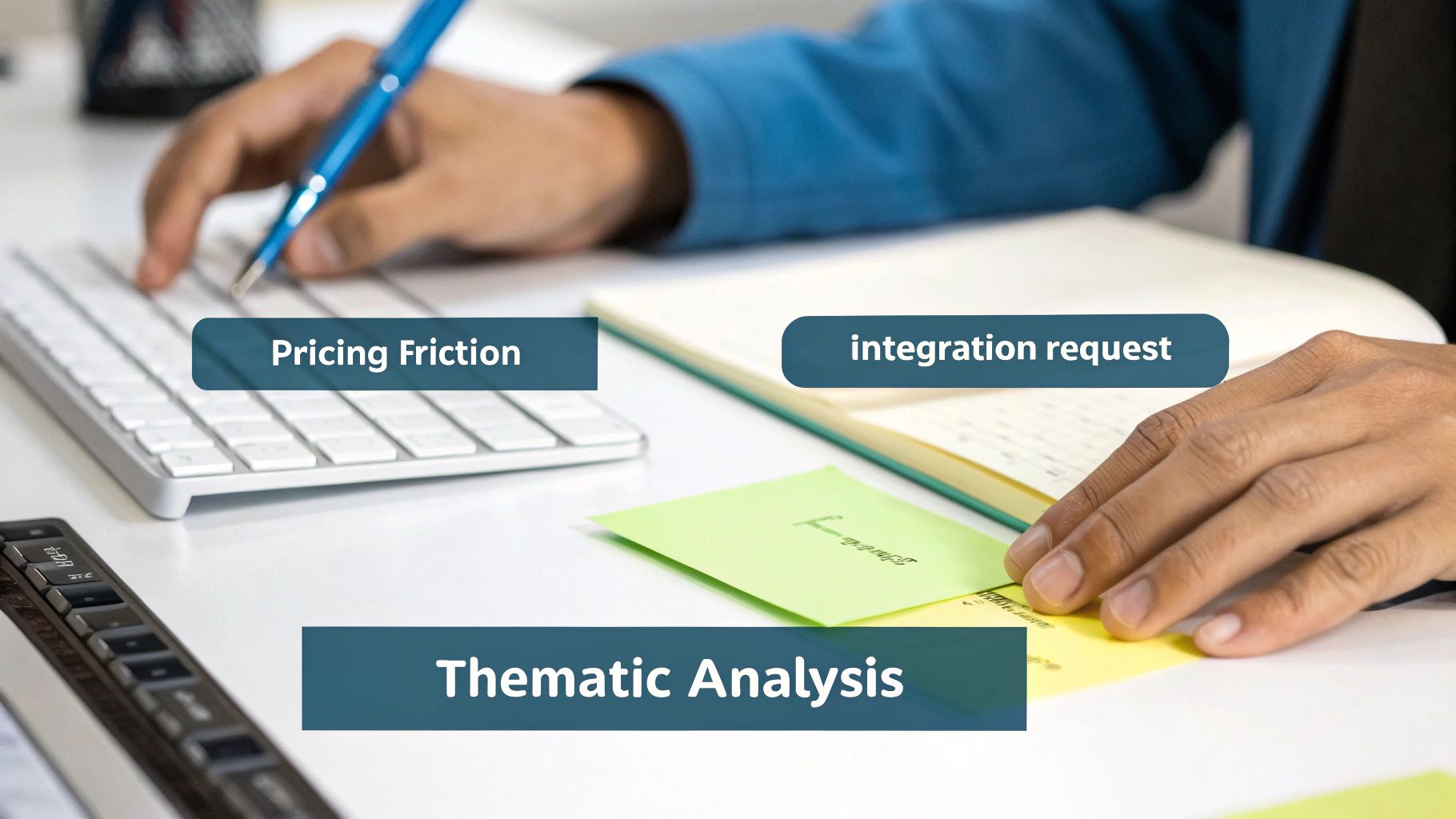

How We Turn Messy Feedback into Real Insights with Thematic Analysis

Anyone can collect a mountain of customer feedback. That's the easy part. The real challenge—and where the game-changing ideas live—is digging through it all to find the gold. At BillyBuzz, we use a simple but powerful process called thematic analysis to turn jumbled, unstructured notes from interviews and social media into a clear, prioritized action plan.

It’s really just a straightforward way of spotting meaningful patterns in what people are saying. Instead of getting lost in raw interview transcripts or endless comment threads, we break everything down into bite-sized, manageable pieces.

Coding Qualitative Data in a Simple Spreadsheet

You don’t need fancy, expensive software for this. Seriously. Our entire system for customer research analysis is built on a basic Google Sheet. Whenever we finish a 15-minute customer interview, we immediately drop the key quotes and our raw notes into the spreadsheet.

Then, we start "coding." It sounds technical, but it’s just a fancy term for tagging.

We read through each bit of feedback and slap a short, descriptive tag on it. This isn't some deep academic exercise; it's about quickly capturing the essence of a comment in just a few words.

For example, our initial tags usually look something like this:

- Pricing Friction: Any comment hinting at concerns about cost, value, or affordability.

- Missing Feature: When a user flat-out says, "I wish your tool could do X."

- Integration Request: Any mention of wanting BillyBuzz to connect with another app, like HubSpot or Slack.

- UX Confusion: When someone describes a part of our interface that was confusing or hard to use.

The secret to good coding isn't perfection; it's consistency. A simple, consistent tagging system you actually use is a million times more valuable than some complex framework that gathers dust.
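One way to keep tags consistent once more than one person is typing them is to map every hand-typed variant back to a fixed vocabulary. The sketch below assumes you export the sheet and clean it in Python. The four canonical tags come from the list above; the aliases and the `normalize_tag` name are hypothetical.

```python
# Canonical tags from the list above.
CANONICAL_TAGS = {
    "Pricing Friction",
    "Missing Feature",
    "Integration Request",
    "UX Confusion",
}

# Hypothetical examples of how the same tag drifts when typed by hand.
ALIASES = {
    "pricing": "Pricing Friction",
    "too expensive": "Pricing Friction",
    "integration": "Integration Request",
    "confusing ui": "UX Confusion",
}


def normalize_tag(raw):
    """Map a hand-typed tag to its canonical form, or fail loudly if it is unknown."""
    cleaned = " ".join(raw.split()).lower()
    for tag in CANONICAL_TAGS:
        if tag.lower() == cleaned:
            return tag
    if cleaned in ALIASES:
        return ALIASES[cleaned]
    raise ValueError(f"Unknown tag {raw!r}; add it to CANONICAL_TAGS or ALIASES")
```

Raising on an unknown tag is a deliberate choice here: it forces a quick decision about whether a new tag is really new or just a variant of an existing one.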

From Simple Tags to Actionable Themes

Once we’ve coded a good batch of interviews and social media comments, we start hunting for clusters. This is where the magic happens. We'll filter our spreadsheet by different tags and see which ones keep popping up.

Suddenly, a bigger picture starts to emerge from the noise. For instance, we might see that 10 different users have feedback tagged with "Integration Request." Digging in, we notice five of them specifically mention "HubSpot" and another three mention "Salesforce."

These clusters are what we call themes. A theme isn't just a summary—it's an insight that points directly to a customer's job-to-be-done. In this case, the theme is crystal clear: "Our users need to push social listening leads directly into their CRM." This gives our product team a customer-validated priority to slot right into the backlog.
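The filter-and-count step is easy to reproduce outside the spreadsheet. Here's a rough sketch using Python's `collections.Counter`. The rows are invented sample data shaped like a sheet export of (tag, quote) pairs, and the function names are assumptions.

```python
from collections import Counter

# Hypothetical rows, shaped like a spreadsheet export: (tag, quote).
rows = [
    ("Integration Request", "I need leads to land in HubSpot automatically"),
    ("Integration Request", "Can this sync with Salesforce?"),
    ("Integration Request", "A HubSpot connector would save me hours"),
    ("Pricing Friction", "Feels pricey for a solo founder"),
    ("UX Confusion", "I couldn't find where to edit my keywords"),
]


def tag_counts(rows):
    """Count how often each tag appears, so the biggest clusters surface first."""
    return Counter(tag for tag, _ in rows)


def mentions_within_tag(rows, tag, terms):
    """Within one tag, count the quotes that mention each term (case-insensitive)."""
    counts = Counter()
    for row_tag, quote in rows:
        if row_tag != tag:
            continue
        lowered = quote.lower()
        for term in terms:
            if term.lower() in lowered:
                counts[term] += 1
    return counts
```

`tag_counts(rows).most_common()` gives the clusters in order of size, and `mentions_within_tag(rows, "Integration Request", ["HubSpot", "Salesforce"])` does the drill-down that turns a cluster into a theme. The counts only point you at where to look; naming the theme is still a human call.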

If you're curious about how this can be automated, it helps to understand some of the underlying NLP methods for social media keyword analysis, which identify these same patterns at a much larger scale.

How We Classify Social Media Mentions on the Fly

For real-time mentions from Reddit and other social platforms, our system is even simpler. We need to know what’s urgent and what can wait. Every single alert we track gets sorted into one of four buckets:

- Positive: Someone is singing our praises or a competitor's customer is praising us. We track this for social proof.

- Negative: A user is reporting a bug, venting frustration, or complaining about a competitor. These get immediate attention.

- Neutral: A general mention that doesn't carry strong positive or negative feelings. We review these weekly.

- Question: A user is asking for help, trying to figure something out, or looking for a recommendation. These are sales or support opportunities.

This simple framework turns a chaotic firehose of notifications into an orderly, prioritized list of what our customers actually need. And while our manual process is great for getting qualitative depth, using AI feedback analysis tools can seriously speed things up as your volume of feedback grows.
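To make the four buckets concrete, here's a deliberately crude sketch of a first-pass sorter. It assumes simple keyword cues, which is not how BillyBuzz or any AI tool classifies mentions. The cue lists and the `classify_mention` name are hypothetical, and substring matching will misfire on real text (for example, "bug" also matches "debug").

```python
# Hypothetical cue phrases; tune these against your own mentions.
NEGATIVE_CUES = ("bug", "broken", "frustrated", "hate", "doesn't work")
QUESTION_CUES = ("how do", "recommend a tool", "looking for")
POSITIVE_CUES = ("love", "great", "awesome")

# What happens to each bucket, as described in the list above.
HANDLING = {
    "Positive": "track for social proof",
    "Negative": "immediate attention",
    "Neutral": "weekly review",
    "Question": "sales or support opportunity",
}


def classify_mention(text):
    """Sort a mention into one of the four buckets using keyword cues."""
    lowered = text.lower()
    # Negative is checked first because those mentions are the most urgent.
    if any(cue in lowered for cue in NEGATIVE_CUES):
        return "Negative"
    if "?" in lowered or any(cue in lowered for cue in QUESTION_CUES):
        return "Question"
    if any(cue in lowered for cue in POSITIVE_CUES):
        return "Positive"
    return "Neutral"
```

The check order is a design choice: a frustrated post that happens to end in a question mark lands in Negative, so it gets looked at right away rather than waiting in the Question queue.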

Okay, you've done the hard work of collecting and analyzing all that customer feedback. Now what? The real magic happens when you turn those spreadsheets and interview notes into actual business decisions. This is where the rubber meets the road—translating what you’ve learned into a clear plan that can change your product or GTM strategy for the better.

At BillyBuzz, we've found that the best way to bridge this gap is by creating two key documents: lean user personas and actionable hypotheses.

This simple process ensures that the voice of the customer doesn't just get heard—it gets embedded directly into our product roadmap.

Create Lean Personas That Actually Help

Let's be honest, most user personas are a waste of time. No one cares if "Marketing Mary" owns a golden retriever. We need to know why she needs our product, not her weekend hobbies.

That's why we build lean, one-page personas focused entirely on what drives a user to act. Our template is dead simple and has just three parts:

- Core Job-to-be-Done: What's the fundamental goal this person is trying to accomplish?

- Key Motivations: What's pushing them to look for a solution right now? Think things like "losing leads to competitors" or "wasting hours on manual data entry."

- Biggest Pain Points: What specific roadblocks are getting in their way? This could be anything from "our current tools are way too clunky" to "I can't find quality conversations online."

This approach keeps our entire team laser-focused on the problems we’re here to solve. It gives us a shared vocabulary for talking about the people we're building for.

Turn Vague Ideas into Testable Hypotheses

An insight that doesn't lead to action is just trivia. Every key finding from your research needs to be framed as a testable hypothesis. Instead of a fuzzy idea like "add a CRM integration," we force ourselves to get specific.

We believe [building X feature] for [persona Y] will result in [Z outcome].

This structure is a game-changer. It connects a proposed feature directly to a business goal and a specific customer segment, turning a vague request into a strategic bet we can actually measure.

Here’s a real-world example straight from our backlog:

- Hypothesis: We believe building a native HubSpot integration for our 'Scrappy Marketer' persona will reduce churn by 5% because our research shows they currently spend 30 minutes per day manually exporting leads.
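If your backlog lives in code or a script rather than a doc, the template can be enforced with a small data structure. This is a sketch under that assumption; the `Hypothesis` class and its field names are hypothetical, and the optional `evidence` field mirrors the "because" clause in the example above.

```python
from dataclasses import dataclass


@dataclass
class Hypothesis:
    feature: str        # the X in the template
    persona: str        # the Y in the template
    outcome: str        # the Z in the template
    evidence: str = ""  # optional "because ..." clause from the research

    def __post_init__(self):
        # Refuse blank fields so a fuzzy idea cannot pass as a hypothesis.
        for name in ("feature", "persona", "outcome"):
            if not getattr(self, name).strip():
                raise ValueError(f"{name} must be filled in before the hypothesis is testable")

    def statement(self):
        """Render the hypothesis in the 'We believe ...' format."""
        text = f"We believe {self.feature} for {self.persona} will result in {self.outcome}"
        if self.evidence:
            text += f" because {self.evidence}"
        return text + "."


hubspot = Hypothesis(
    feature="building a native HubSpot integration",
    persona="our 'Scrappy Marketer' persona",
    outcome="a 5% reduction in churn",
    evidence="our research shows they currently spend 30 minutes per day manually exporting leads",
)
```

`hubspot.statement()` renders the HubSpot example in the template's wording, and leaving any of the three core fields empty raises an error instead of quietly producing a vague bet.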

Suddenly, you're not just comparing features against each other; you're comparing their potential business impact. The stakes are high, too. Research shows that over half of all customers will jump ship after just one bad experience. And on the flip side, customers are 2.4 times more likely to stay loyal if you solve their problems quickly. Getting this right matters.

Prioritize with a Simple Impact vs. Effort Matrix

Once you have a list of well-defined hypotheses, the next step is deciding what to build first. This is where a simple impact/effort matrix comes in handy. We just drop all our hypotheses into a table and score each one on two simple scales from 1 to 5:

- Impact (1-5): How much do we think this will move the needle on our key metrics (e.g., activation, retention, revenue)?

- Effort (1-5): How complex will this be for the team to build? How much time and resources will it take?

This gives us a clear, visual way to spot the low-hanging fruit—those high-impact, low-effort ideas that give us the most bang for our buck.

Here’s a simplified version of what this might look like:

Hypothesis Prioritization Framework

| Hypothesis | Estimated Impact (1-5) | Estimated Effort (1-5) | Priority |
|---|---|---|---|
| Build native HubSpot integration | 5 (High) | 3 (Medium) | High |
| Redesign user onboarding flow | 4 (High) | 2 (Low) | Highest |
| Add dark mode to the UI | 2 (Low) | 1 (Very Low) | Low |
| Launch a community forum | 4 (High) | 5 (Very High) | Medium |

This simple exercise is the final, crucial step in our process. It’s how we turn a mountain of raw customer data into a smart, focused, and customer-driven product roadmap. You can also explore how AI improves customer feedback integration to see how new tools are making this even easier.
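The article doesn't spell out how the two scores combine into a priority, so the thresholds below are an assumption: a quadrant-style rule picked because it reproduces the labels in the table above. Treat it as a starting point, not a formula. The `priority` and `prioritize` names are hypothetical.

```python
# Sort order for the labels, from build-first to build-last.
RANK = {"Highest": 0, "High": 1, "Medium": 2, "Low": 3}


def priority(impact, effort):
    """Label a hypothesis from its 1-5 impact and effort scores (assumed thresholds)."""
    for name, value in (("impact", impact), ("effort", effort)):
        if not 1 <= value <= 5:
            raise ValueError(f"{name} must be between 1 and 5, got {value}")
    if impact <= 2:
        return "Low"      # low impact is low priority, however cheap it is
    if effort <= 2:
        return "Highest"  # meaningful impact for little effort: low-hanging fruit
    if effort == 3:
        return "High"
    return "Medium"       # worthwhile, but expensive to build


def prioritize(items):
    """Return (name, label) pairs sorted from build-first to build-last."""
    labeled = [(name, priority(impact, effort)) for name, impact, effort in items]
    return sorted(labeled, key=lambda pair: RANK[pair[1]])


# Scores copied from the table above.
backlog = [
    ("Build native HubSpot integration", 5, 3),
    ("Redesign user onboarding flow", 4, 2),
    ("Add dark mode to the UI", 2, 1),
    ("Launch a community forum", 4, 5),
]
```

Note that a plain impact-divided-by-effort ratio would rank dark mode (2 / 1) level with the onboarding redesign (4 / 2), which is why this sketch checks impact first.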

Common Mistakes to Avoid in Customer Analysis

Believe me, we’ve made every mistake in the book so you don’t have to. The road from raw customer feedback to a smart business decision is riddled with potholes. A solid customer research analysis process is your best defense against wasting months building something nobody actually wants.

The first and most dangerous trap is confirmation bias. This is our natural, human tendency to hunt for data that proves what we already believe to be true. Early on at a previous startup, we were absolutely convinced a certain feature would be a massive hit. We cherry-picked the two customers who raved about the idea and conveniently ignored the ten who were completely indifferent.

Needless to say, that’s how you end up building features in a bubble, totally disconnected from what your market is telling you.

Drowning in Data but Starving for Decisions

Another pitfall I see all the time is analysis paralysis. It’s incredibly easy to get so buried in spreadsheets, charts, and interview transcripts that you never actually come up for air to make a decision. You just keep digging for one more data point, hoping for that perfect, risk-free answer that, frankly, doesn't exist.

We learned the hard way that good-enough data acted upon today is infinitely more valuable than perfect data that arrives a month too late. You have to set a hard deadline for your analysis and force a decision. The goal isn't to eliminate all uncertainty; it's to gather just enough confidence to take the next calculated step forward.

As a founder, you cannot outsource understanding. Staying deeply involved in customer research analysis is non-negotiable. It’s how you develop the intuition that guides your biggest strategic bets.

Avoiding Common Data Imbalances

Finally, watch out for imbalances in the data you're collecting and how you weigh it. Most teams I've worked with tend to fall into one of two camps, and both are equally flawed.

- Relying only on quantitative data: These are the teams that obsess over survey scores and analytics dashboards. They see the "what" but completely miss the rich context, the why, that only qualitative feedback can provide. A dropping NPS score is a signal flare, but a real customer interview tells you the story behind the number.

- Listening only to the loudest customer: This is the polar opposite problem. You end up building your entire roadmap around the passionate, detailed demands of a single power user, completely forgetting they might not represent your broader customer base at all.

We stumbled right into the "loudest customer" trap with an early integration request. We poured weeks of development time into building it for one very vocal user, only to discover that almost no one else ever touched it. Your analysis has to balance the depth of individual stories with the breadth of wider trends. That's the only way to paint a complete and accurate picture.

Common Questions We Get About Customer Research

Other founders often ask us how they can actually pull off customer research analysis without it turning into a massive, all-consuming project. It's a fair question. Here are the most common things we get asked and our straight-up answers based on how we run things at BillyBuzz.

How Much Time Should a Small Team Actually Spend on This?

For a small startup just getting started, aim for a dedicated block of 3-4 hours every single week. The key here is consistency, not trying to boil the ocean once a quarter.

We have a "Feedback Friday" at BillyBuzz. One of us carves out that time to do nothing but go through new survey replies, check social media mentions, and read over interview notes. Making it a regular habit means the customer's voice is always part of our weekly planning, not some big report we only look at when things go wrong.

What’s the Best Free (or Really Cheap) Tool to Get Started?

Honestly, don't get hung up on the tools at first. The goal is to build the habit of listening, not to build the perfect, expensive tech stack. We ran our whole research process for less than $50 a month during our first year.

You can get surprisingly far with a simple, free setup:

- For Surveys: Google Forms or Tally.so work perfectly.

- For Thematic Analysis: A simple Google Sheet is all you need.

- For Social Listening: Set up a free alert tool to track keywords on Reddit.

The most powerful tool you have is genuine curiosity. Everything else is just there to support it.

How Do I Get My Technical Co-Founder to Care About This?

You've got to speak their language: data and risk reduction. Pitch customer research analysis as the single best way to de-risk your product roadmap. It’s about preventing wasted engineering time on features nobody wants.

Instead of saying, "we need to understand our users," try this: "This analysis will give us the data to make sure we don't build features nobody will pay for."

Even better, show them, don't just tell them. Share a powerful quote or a short video clip from a customer interview. When a technical founder hears the pain point directly from a frustrated user, it's no longer a vague marketing idea. It becomes a real, tangible problem they’ll actually get excited about solving.
